Database Normalization and Performance: Theory, Practice & Real-World Impact

Database design is one of the most critical steps in software architecture. A well-designed database ensures not only data consistency and integrity, but also significantly impacts long-term application performance. In this post, we'll explore what database normalization is, the problems it solves, and how it affects performance in real-world systems.

1. What is Database Normalization?

Normalization is a process used in relational database design to organize data efficiently, reduce redundancy, and prevent anomalies during data operations. The core purpose is to structure data so that each piece of information is stored only once, minimizing data repetition and maintaining data integrity.

Main Goals

- Eliminate redundant data

- Increase data consistency

- Ensure data integrity

- Prevent update, insert, and delete anomalies

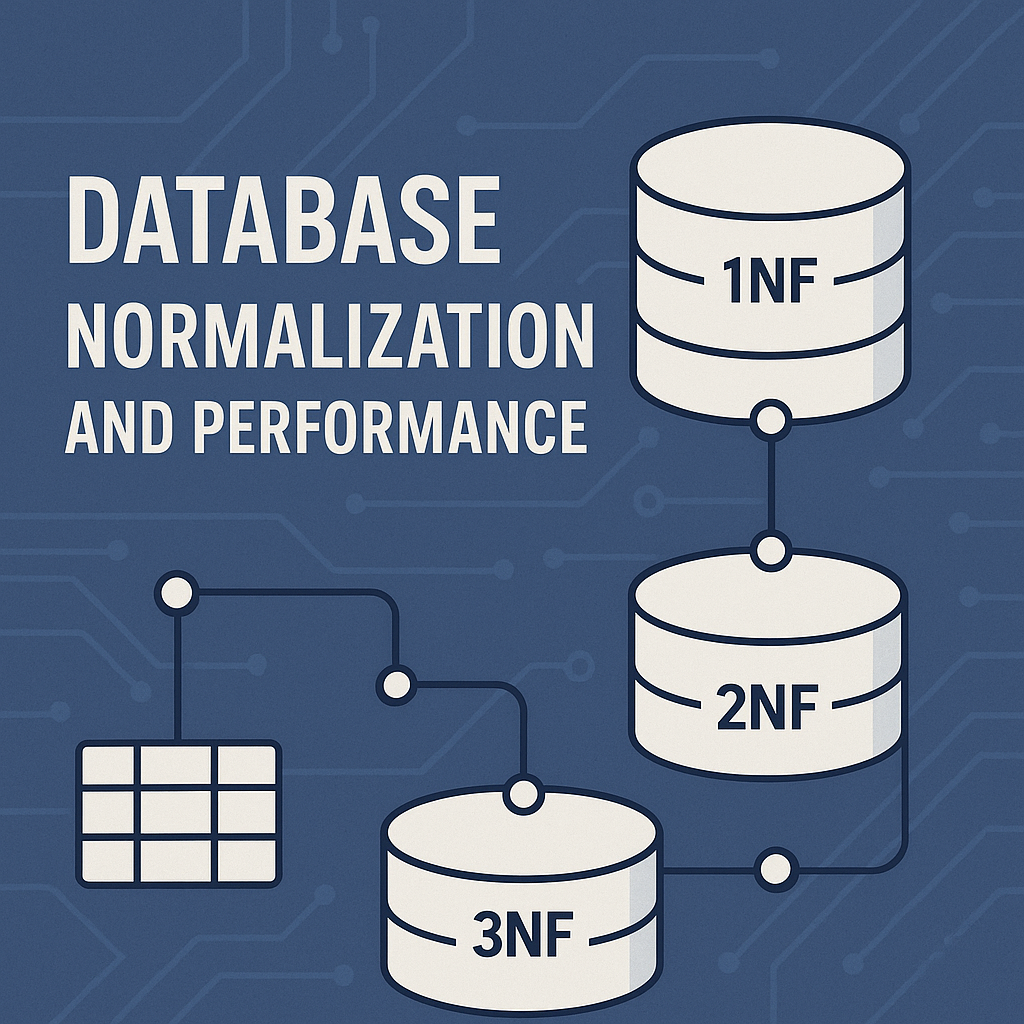

2. Stages of Normalization

Normalization is achieved by organizing tables into certain normal forms (NF), each eliminating specific types of redundancy and dependency. The most widely used are:

- First Normal Form (1NF): Every cell contains a single value; no repeating groups or arrays. Data is atomic.

- Second Normal Form (2NF): Meets 1NF. All non-key attributes are fully dependent on the entire primary key, which removes partial dependencies.

- Third Normal Form (3NF): Meets 2NF. No transitive dependencies between non-key attributes.

Higher forms such as Boyce–Codd Normal Form (BCNF), 4NF, and 5NF address rarer cases (for example, multivalued and join dependencies), but most practical applications stop at 3NF or BCNF.
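To make "partial dependency" concrete, here is a minimal Python sketch that tests whether a functional dependency holds in a handful of sample rows. The helper name `fd_holds` and the sample data are assumptions made for illustration. A dependency that holds in sample data is only a hint: real dependencies come from the business rules, not from whatever rows happen to be in the table.

```python
def fd_holds(rows, lhs, rhs):
    """Return True if, in these rows, each combination of `lhs` values
    maps to exactly one combination of `rhs` values."""
    seen = {}
    for row in rows:
        key = tuple(row[col] for col in lhs)
        val = tuple(row[col] for col in rhs)
        # setdefault stores the first value seen for this key and returns it
        if seen.setdefault(key, val) != val:
            return False
    return True


# Hypothetical sample rows; the candidate key is (StudentID, Course).
rows = [
    {"StudentID": 1, "StudentName": "Alice", "Course": "Math", "Instructor": "Smith"},
    {"StudentID": 1, "StudentName": "Alice", "Course": "Physics", "Instructor": "Miller"},
    {"StudentID": 2, "StudentName": "Bob", "Course": "Math", "Instructor": "Smith"},
]

# Each of these depends on only PART of the key, which is what 2NF forbids.
name_depends_on_student = fd_holds(rows, ["StudentID"], ["StudentName"])
instructor_depends_on_course = fd_holds(rows, ["Course"], ["Instructor"])

# Sanity check: StudentID alone does not determine Course.
student_determines_course = fd_holds(rows, ["StudentID"], ["Course"])

print(name_depends_on_student, instructor_depends_on_course, student_determines_course)
```

Both partial dependencies hold in this sample, which suggests `StudentName` and `Instructor` belong in tables keyed by `StudentID` and `Course` respectively.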

3. The Benefits of Normalization

- Reduces data duplication: Each fact is stored in one place.

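- Prevents inconsistencies: Because each fact lives in one place, a single update changes it everywhere it is referenced.

- Smaller table sizes: With less repeated data per row, tables and their indexes are more compact, which can make scans and lookups cheaper.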

- Prevents anomalies: Update, insert, and delete operations are safer and more predictable.

4. Practical Example: Before and After Normalization

Let’s consider a simple student-course database table:

Before Normalization:

| StudentID | StudentName | Course | Instructor |
|---|---|---|---|
| 1 | Alice | Math | Smith |
| 1 | Alice | Physics | Miller |
| 2 | Bob | Math | Smith |

Problems:

- Data repetition (e.g., Alice and Smith appear multiple times)

- Updating an instructor’s name requires multiple changes

- Deleting a student’s last course removes all student info
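The update and delete problems can be reproduced in a few lines. This is a minimal sketch using Python's built-in `sqlite3` module and an in-memory database. The table name `StudentCourses` and the new instructor name are assumptions chosen for the demonstration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Single flat table, keyed by (StudentID, Course). The table name is hypothetical.
conn.execute(
    """
    CREATE TABLE StudentCourses (
        StudentID   INTEGER,
        StudentName TEXT,
        Course      TEXT,
        Instructor  TEXT,
        PRIMARY KEY (StudentID, Course)
    )
    """
)
conn.executemany(
    "INSERT INTO StudentCourses VALUES (?, ?, ?, ?)",
    [
        (1, "Alice", "Math", "Smith"),
        (1, "Alice", "Physics", "Miller"),
        (2, "Bob", "Math", "Smith"),
    ],
)

# Update anomaly: renaming the Math instructor must touch one row per enrolled student.
cur = conn.execute(
    "UPDATE StudentCourses SET Instructor = 'Smith-Jones' WHERE Course = 'Math'"
)
rows_touched = cur.rowcount

# Delete anomaly: Bob drops his only course, and every trace of Bob goes with it.
conn.execute("DELETE FROM StudentCourses WHERE StudentID = 2 AND Course = 'Math'")
bob_rows = conn.execute(
    "SELECT COUNT(*) FROM StudentCourses WHERE StudentName = 'Bob'"
).fetchone()[0]

print(rows_touched, bob_rows)
```

Here the rename touches two rows for a single real-world change, and after the delete there is no row left that records Bob at all.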

After Normalization:

Students

| StudentID | StudentName |
|---|---|
| 1 | Alice |
| 2 | Bob |

Courses

| Course | Instructor |
|---|---|
| Math | Smith |
| Physics | Miller |

Enrollments

| StudentID | Course |
|---|---|
| 1 | Math |
| 1 | Physics |
| 2 | Math |

Advantages:

- Each fact is stored once

- Updating instructor info happens in one place

- Student data and enrollment data are independent
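One way to express the three tables as a schema is sketched below with Python's `sqlite3` module. The column types, the `NOT NULL` constraints, and the foreign keys are assumptions; the tables above only fix the column names and the data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript(
    """
    CREATE TABLE Students (
        StudentID   INTEGER PRIMARY KEY,
        StudentName TEXT NOT NULL
    );
    CREATE TABLE Courses (
        Course     TEXT PRIMARY KEY,
        Instructor TEXT NOT NULL
    );
    CREATE TABLE Enrollments (
        StudentID INTEGER NOT NULL REFERENCES Students(StudentID),
        Course    TEXT    NOT NULL REFERENCES Courses(Course),
        PRIMARY KEY (StudentID, Course)
    );
    INSERT INTO Students VALUES (1, 'Alice'), (2, 'Bob');
    INSERT INTO Courses VALUES ('Math', 'Smith'), ('Physics', 'Miller');
    INSERT INTO Enrollments VALUES (1, 'Math'), (1, 'Physics'), (2, 'Math');
    """
)

# The instructor is stored once, so a rename is a single-row update.
rows_touched = conn.execute(
    "UPDATE Courses SET Instructor = 'Smith-Jones' WHERE Course = 'Math'"
).rowcount

# Removing all of Bob's enrollments leaves his student record intact.
conn.execute("DELETE FROM Enrollments WHERE StudentID = 2")
bob = conn.execute(
    "SELECT StudentName FROM Students WHERE StudentID = 2"
).fetchone()

print(rows_touched, bob)
```

The same rename that touched several rows in the flat table now touches one, and Bob still exists after his last enrollment is deleted.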

5. How Normalization Affects Performance

Positive Impacts

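- Smaller, narrower tables: Less redundant data to read, which can speed up single-table queries and index scans.

- Consistency: Data integrity checks are easier and updates are reliable.

- Efficient indexing: Narrower rows and less duplicated data tend to produce smaller indexes that are cheaper to maintain on writes.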

Potential Downsides

- Increased JOINs: To assemble complete data, queries often require joining multiple tables, which can slow queries down on large datasets, particularly when the join columns are not indexed.

- Complex queries: Highly normalized structures can make SELECT statements more complex and slower.

- Real-time reads: Systems requiring extremely fast read performance (especially reporting/analytics) may benefit from denormalized structures.
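The JOIN cost is easy to see with the student-course example: rebuilding the original four-column view from the normalized tables takes two joins. The sketch below uses Python's `sqlite3` module. The index name `idx_enrollments_course` is hypothetical, and whether such an index helps depends on the engine and the data volume.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE Students (StudentID INTEGER PRIMARY KEY, StudentName TEXT NOT NULL);
    CREATE TABLE Courses (Course TEXT PRIMARY KEY, Instructor TEXT NOT NULL);
    CREATE TABLE Enrollments (
        StudentID INTEGER NOT NULL REFERENCES Students(StudentID),
        Course    TEXT    NOT NULL REFERENCES Courses(Course),
        PRIMARY KEY (StudentID, Course)
    );
    -- The composite primary key already serves lookups by StudentID;
    -- this extra (hypothetical) index serves lookups and joins by Course.
    CREATE INDEX idx_enrollments_course ON Enrollments(Course);

    INSERT INTO Students VALUES (1, 'Alice'), (2, 'Bob');
    INSERT INTO Courses VALUES ('Math', 'Smith'), ('Physics', 'Miller');
    INSERT INTO Enrollments VALUES (1, 'Math'), (1, 'Physics'), (2, 'Math');
    """
)

# Two joins are needed to get back the flat "who takes what with whom" view.
flat = conn.execute(
    """
    SELECT s.StudentID, s.StudentName, c.Course, c.Instructor
    FROM Enrollments AS e
    JOIN Students AS s ON s.StudentID = e.StudentID
    JOIN Courses  AS c ON c.Course = e.Course
    ORDER BY s.StudentID, c.Course
    """
).fetchall()

for row in flat:
    print(row)
```

On three rows the joins are free. On millions of rows, each join adds lookup work that a single flat table would not need, which is the trade-off described above.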

6. Denormalization: The Performance Trade-Off

In certain scenarios, especially for read-heavy or analytical systems, denormalization is deliberately used to improve performance.

For example:

- Storing frequently joined data in a single table

- Creating summary or reporting tables

Trade-off:

While denormalization speeds up reads, it introduces data redundancy and the risk of inconsistencies. Updating duplicated data becomes more complex and must be handled with care.
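As an illustration of that extra care, the sketch below keeps a small summary table in sync with triggers, again using Python's `sqlite3` module. The table `CourseEnrollmentCounts` and both trigger names are hypothetical. Triggers are only one option; application code or scheduled rebuilds are common alternatives.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE Enrollments (
        StudentID INTEGER NOT NULL,
        Course    TEXT    NOT NULL,
        PRIMARY KEY (StudentID, Course)
    );

    -- Denormalized summary: redundant with COUNT(*) over Enrollments,
    -- but readable without scanning or aggregating.
    CREATE TABLE CourseEnrollmentCounts (
        Course       TEXT PRIMARY KEY,
        StudentCount INTEGER NOT NULL
    );

    CREATE TRIGGER enrollments_after_insert AFTER INSERT ON Enrollments
    BEGIN
        -- Create the counter row on first use, then increment it.
        INSERT OR IGNORE INTO CourseEnrollmentCounts (Course, StudentCount)
        VALUES (NEW.Course, 0);
        UPDATE CourseEnrollmentCounts
        SET StudentCount = StudentCount + 1
        WHERE Course = NEW.Course;
    END;

    CREATE TRIGGER enrollments_after_delete AFTER DELETE ON Enrollments
    BEGIN
        UPDATE CourseEnrollmentCounts
        SET StudentCount = StudentCount - 1
        WHERE Course = OLD.Course;
    END;

    INSERT INTO Enrollments VALUES (1, 'Math'), (1, 'Physics'), (2, 'Math');
    DELETE FROM Enrollments WHERE StudentID = 1 AND Course = 'Physics';
    """
)

# Reads hit the small summary table instead of aggregating Enrollments.
counts = conn.execute(
    "SELECT Course, StudentCount FROM CourseEnrollmentCounts ORDER BY Course"
).fetchall()
print(counts)
```

The read becomes a plain lookup, but every write path to `Enrollments` now has to update the copy as well. Any path that bypasses the triggers, such as a bulk load with triggers disabled, leaves the counts wrong.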

7. Striking a Balance: OLTP vs. OLAP Systems

- OLTP (Online Transaction Processing): Systems such as banking or e-commerce, which are write-heavy and require consistency, benefit from normalized databases.

- OLAP (Online Analytical Processing): Analytical/reporting systems often prefer denormalized models such as star schemas (or snowflake schemas, which re-normalize the dimension tables to some degree) for fast reads and aggregate queries.
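For a sense of what a star schema looks like, here is a deliberately tiny sketch with one fact table and one dimension table, using Python's `sqlite3` module. Every table name, column name, and value is invented for illustration. Real warehouses typically have several dimensions, such as date, customer, and store.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension table: Category is repeated on every product row rather than
    -- split into its own table. A snowflake schema would split it out.
    CREATE TABLE DimProduct (
        ProductKey  INTEGER PRIMARY KEY,
        ProductName TEXT NOT NULL,
        Category    TEXT NOT NULL
    );

    -- Fact table: one row per sale, with a key into each dimension.
    CREATE TABLE FactSales (
        SaleID      INTEGER PRIMARY KEY,
        ProductKey  INTEGER NOT NULL REFERENCES DimProduct(ProductKey),
        Quantity    INTEGER NOT NULL,
        AmountCents INTEGER NOT NULL
    );

    INSERT INTO DimProduct VALUES
        (1, 'Notebook', 'Stationery'),
        (2, 'Pen',      'Stationery'),
        (3, 'Lamp',     'Lighting');
    INSERT INTO FactSales VALUES
        (1, 1, 2, 1000),
        (2, 2, 5,  500),
        (3, 3, 1, 2500),
        (4, 1, 1,  500);
    """
)

# A typical analytical query: one join from facts to a dimension, then aggregate.
totals = conn.execute(
    """
    SELECT d.Category, SUM(f.AmountCents) AS TotalCents
    FROM FactSales AS f
    JOIN DimProduct AS d ON d.ProductKey = f.ProductKey
    GROUP BY d.Category
    ORDER BY d.Category
    """
).fetchall()
print(totals)
```

Grouping by category needs only one join because the category name sits directly in the dimension table. That redundancy is accepted because dimension data changes rarely and the workload is dominated by reads.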

8. Conclusion & Best Practices

- Normalization is essential for data integrity and maintainability in most transactional systems.

- Over-normalization can hurt performance—always consider real-world usage and access patterns.

- Use indexes, query optimization, and when needed, careful denormalization to balance data integrity with performance.

- There’s no one-size-fits-all; the right design depends on your application’s unique needs.