Why Use ClickHouse for Log Storage?

In my work as a software developer, managing log data has always been a critical task that needed a solid solution. Logs grow quickly, and the need to analyze them fast keeps increasing. After trying traditional databases and various log management tools, I eventually discovered ClickHouse.

ClickHouse addressed most of the challenges I encountered with log management and even exceeded my expectations. Its blazing-fast query performance, high write speeds, and powerful compression capabilities made it the ideal choice. Additionally, its scalability and cost-effectiveness for storing log data solidified my decision.

In this article, I’ll share why ClickHouse is such a powerful tool for log management, based on my own experience.

What is ClickHouse?

ClickHouse is an open-source, column-oriented database management system originally developed at Yandex and now maintained by ClickHouse, Inc. Its columnar storage format is designed for analytical queries over large datasets, and it also sustains high insert throughput. These characteristics make ClickHouse a strong option for storing and analyzing logs.

Advantages of ClickHouse for Log Storage

- High-Performance Query Processing: ClickHouse is known for running fast queries over large datasets. Because its storage is columnar, a query reads only the columns it references, which reduces disk I/O and speeds up retrieval. As a result, queries over very large log tables can often return results in seconds.

- Fast Write Speeds: Log data grows quickly and must be written continuously. ClickHouse handles high ingestion rates, especially when rows are inserted in batches rather than one at a time, which makes it well suited to real-time log streams. You can store logs as they arrive and analyze them shortly afterward.

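- Data Compression: ClickHouse compresses data column by column, and because values within a single log column (such as the log level) are often repetitive, they compress well. This reduces storage costs and can also speed up queries, since less data has to be read from disk. By default ClickHouse applies a general-purpose codec (LZ4 in a standard self-managed installation), and you can choose a codec per column, such as ZSTD, with the `CODEC` clause.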

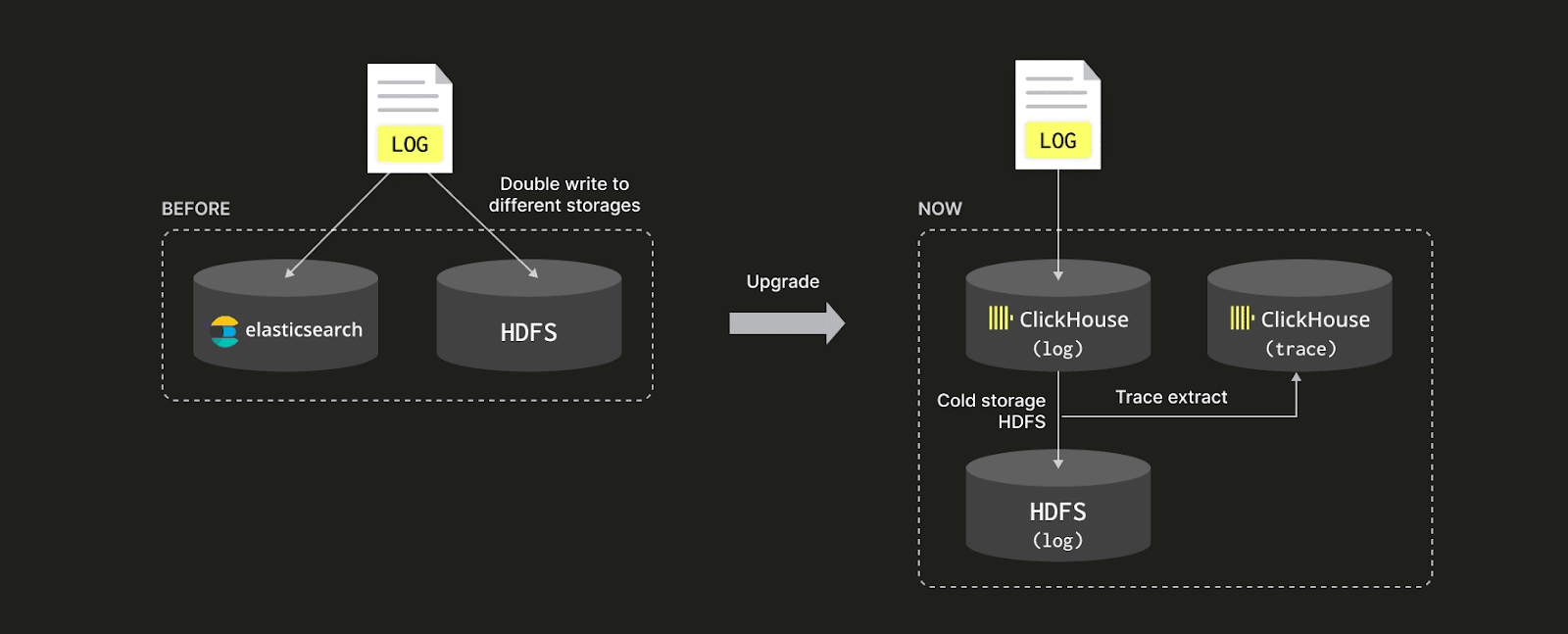

- Easy Scalability: As log data grows, scalability becomes essential. ClickHouse supports horizontal scaling through sharding and replication, so you can add servers as your data grows and spread both storage and query load across them.

- Real-Time Analytics and Data Processing: ClickHouse’s rapid data processing capabilities allow you to perform real-time analysis on log data. Instant queries and analytics let you quickly extract insights and respond to system issues as they arise.

Creating a Log Table in ClickHouse and Sending Data Using Golang

Sending log data to ClickHouse is straightforward. First, we’ll create a table to store the log data, incorporating daily partitioning to optimize query performance. Then, I’ll provide an example of how to insert data into this table using Golang.

Step 1: Creating a Log Table with Daily Partitioning

Connect to ClickHouse and run the following SQL command to create a simple log table. This table will store logs with a timestamp, log level, and message content, partitioned by day:

```sql
CREATE TABLE logs (
    timestamp DateTime,
    level String,
    message String
) ENGINE = MergeTree()
PARTITION BY toYYYYMMDD(timestamp)
ORDER BY timestamp;
```

In this table:

- PARTITION BY toYYYYMMDD(timestamp): Partitions the data by day, storing each day's logs in a separate partition. Queries that filter on `timestamp` can skip partitions outside the requested range, and old logs can be removed cheaply by dropping whole daily partitions.

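- ORDER BY timestamp: Sets the table's sorting key, so rows are stored in timestamp order. Because no separate `PRIMARY KEY` is given, ClickHouse also uses this key as the primary index, which lets time-range queries skip blocks of rows that fall outside the range.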

Step 2: Sending Log Data with Golang

Below is an example of how to send data to this table using Golang. We’ll use the github.com/ClickHouse/clickhouse-go/v2 package. To add this package to your project, run:

```shell
go get github.com/ClickHouse/clickhouse-go/v2
```

Here’s the Golang code:

```go
package main

import (
	"context"
	"log"
	"time"

	"github.com/ClickHouse/clickhouse-go/v2"
)

func main() {
	ctx := context.Background()

	// Establish a connection to ClickHouse over the native protocol (port 9000)
	conn, err := clickhouse.Open(&clickhouse.Options{
		Addr: []string{"localhost:9000"},
		Auth: clickhouse.Auth{
			Database: "default",
			Username: "default",
			Password: "",
		},
	})
	if err != nil {
		log.Fatalf("connection failed: %v", err)
	}
	defer conn.Close()

	// Test the connection
	if err := conn.Ping(ctx); err != nil {
		log.Fatalf("ping failed: %v", err)
	}

	// Prepare a batch insert into the logs table
	batch, err := conn.PrepareBatch(ctx, "INSERT INTO logs (timestamp, level, message)")
	if err != nil {
		log.Fatalf("prepare batch failed: %v", err)
	}

	// Example log data; values are appended in the same order as the columns
	timestamp := time.Now()
	level := "INFO"
	message := "This is a test log message."

	if err := batch.Append(timestamp, level, message); err != nil {
		log.Fatalf("append failed: %v", err)
	}

	// Send all appended rows to ClickHouse in a single INSERT
	if err := batch.Send(); err != nil {
		log.Fatalf("send failed: %v", err)
	}

	log.Println("Log successfully inserted.")
}
```

In this code:

- Connecting to ClickHouse: The `clickhouse.Open` function connects to ClickHouse, where you set the connection details (address, database name, username, password).

- Inserting Log Data: Using the `PrepareBatch` and `Append` functions, you can insert log data into the table, then send it with the `Send` function.

By following these steps, you can store log data from your Golang application directly into ClickHouse and leverage its performance for quick log analysis and system monitoring.

You can learn more about ClickHouse, including its documentation, on the official ClickHouse website.